Getting Started with Prompt Engineering: A Technical Guide for Developers

Quick Summary

Prompt engineering is the process of designing precise input instructions for large language models (LLMs) such as GPT-4, Claude, and Mistral to deliver accurate, structured, and reliable outputs. For developers, it functions as a programming interface where natural language replaces code to direct intelligent behavior. Whether you are building RAG pipelines, automating document extraction, or orchestrating multi-agent systems, prompt engineering remains a foundational skill for aligning AI models with business and technical goals.

As adoption of LLMs such as OpenAI’s GPT-4, Anthropic’s Claude, and Mistral continues to accelerate, prompt engineering has become an essential discipline in the AI development lifecycle. It’s the craft of designing and refining input instructions to elicit accurate responses from language models.

But prompt engineering is more than just clever phrasing. If done right, it can become a programming interface to intelligence. This guide walks you through the foundational concepts, design patterns, and best practices for applying prompt engineering effectively in real-world technical applications.

What is Prompt Engineering?

Prompt engineering is the practice of constructing inputs (prompts) that guide large language models to produce desired outputs with high reliability, accuracy, and structure. Instead of writing code, you engineer language as input and draw on the latent capabilities of a foundation model trained on billions of tokens of data.

Prompt = Instruction + Context + Constraints

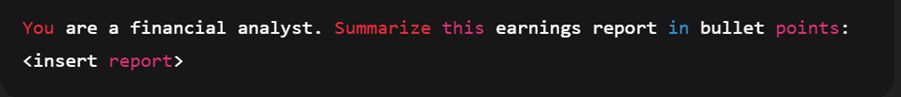

Example (simple): Role-based summarizations

Example (structured output): Data extraction
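As a minimal sketch of the Instruction + Context + Constraints formula, the helper below assembles a prompt from those three parts and uses it for both example types named above. The function name `build_prompt`, the section labels, and the sample wording are illustrative assumptions, not a fixed standard.

```python
def build_prompt(instruction: str, context: str, constraints: list[str]) -> str:
    """Assemble a prompt from an instruction, its context, and a list of constraints."""
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    return (
        f"Instruction:\n{instruction}\n\n"
        f"Context:\n{context}\n\n"
        f"Constraints:\n{constraint_lines}"
    )


# Role-based summarization (simple). The role and limits are made-up examples.
summary_prompt = build_prompt(
    instruction="You are a senior financial analyst. Summarize the report below for an executive.",
    context="<report text goes here>",
    constraints=["At most three sentences", "No jargon"],
)

# Data extraction (structured output). The field names are hypothetical.
extraction_prompt = build_prompt(
    instruction="Extract the invoice number, total amount, and due date from the text below.",
    context="<invoice text goes here>",
    constraints=[
        'Respond with JSON only, using the keys "invoice_number", "total", "due_date"',
        "Use null for any field that is not present",
    ],
)

print(summary_prompt)
```

Keeping the three parts separate makes it easier to vary one of them (for example, tightening a constraint) while holding the others fixed during experiments.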

What Are the Core Concepts in Prompt Engineering?

At the heart of prompt engineering are a few foundational methods that determine how effectively developers can control LLM outputs. These concepts aren’t just tricks—they are emerging as industry-standard practices for aligning generative models with predictable behavior.

| Concept | Description | Industry Context |

|---|---|---|

| Role Prompting | Assigning the model a persona or role (“You are a financial advisor…”) to shape tone and authority. | Widely adopted in customer service chatbots, where agents act as bank tellers, tutors, or medical assistants. |

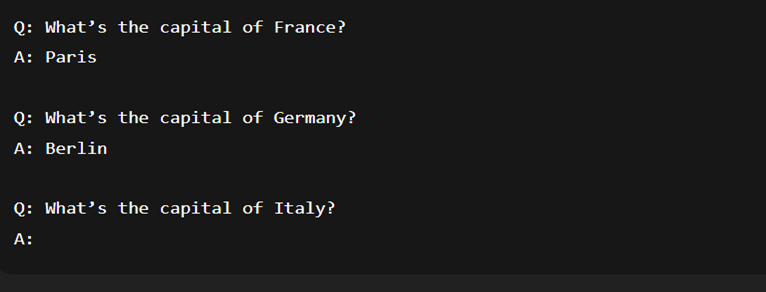

| Few-Shot Prompting | Supplying 1–3 examples to improve consistency and reduce ambiguity. | According to OpenAI’s developer documentation, few-shot prompting can reduce error rates by 20–30% in structured data tasks. |

| Chain-of-Thought Prompting | Asking the model to explain its reasoning step by step. | Research from Google (Wei et al., 2022) showed CoT prompts improved math and reasoning benchmarks by up to 40%. |

| Output Constraints | Enforcing structure (JSON, YAML, tables). | Critical in enterprise use cases like contract parsing or API orchestration where strict formatting is required. |

| System vs. User Prompts | System prompts set long-term context; user prompts execute tasks. | For example, ChatGPT’s “system” layer ensures compliance filters persist across sessions. |
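To make the system vs. user split concrete, here is a small sketch that builds a chat-style message list. The `role`/`content` dictionary layout is the shape used by OpenAI-style chat APIs; some providers accept the system prompt as a separate parameter instead, so treat the exact structure as an assumption to check against your provider’s documentation. The helper name `build_messages` is hypothetical.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Pair a long-lived system prompt with a single user task."""
    return [
        {"role": "system", "content": system_prompt},  # persistent role and rules
        {"role": "user", "content": user_prompt},      # the task for this turn
    ]


messages = build_messages(
    system_prompt="You are a financial advisor. Answer concisely and flag any assumptions.",
    user_prompt="Explain the difference between an ETF and a mutual fund.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```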

Prompt-Driven Experimentation Setup

Building reliable prompt-driven systems requires a proper experimentation workflow. Developers often underestimate how much iteration it takes to reach stable performance. Here’s what a dev team needs to start prototyping prompt engineering workflows:

Tooling Options

| Tool / Platform | Primary Use Case | Why It Matters |
|---|---|---|
| OpenAI Playground | Rapid prototyping & testing | Best for testing small variations quickly without writing code. |
| LangChain | Building modular prompt pipelines | Critical for applications needing multi-step logic or RAG. |
| LlamaIndex | Retrieval-Augmented Generation (RAG) | Helps integrate domain-specific data into prompts. |
| PromptLayer / Helicone | Logging, observability, versioning | Treats prompts like code, with version control and regression testing. |
| Jupyter Notebooks | Experimentation and documentation | Allows mixing of code + prompt results for reproducibility. |

According to a 2024 State of AI Engineering report by Anyscale, over 65% of LLM developers reported that prompt versioning and observability were the hardest challenges in scaling prototypes to production.

What Are the Common Prompt Design Patterns Every Developer Should Know?

Prompt design patterns are like software design patterns. They are reusable strategies for common challenges. Knowing when to apply each saves time and improves reliability.

1. Zero-Shot Prompting

When to Use: Simple, well-known tasks where a bare instruction is enough and the model can rely on its general knowledge.

Risk: May produce vague or inconsistent results.

Example: Translate the following sentence into French: "Good morning, how are you?"

2. Few-Shot Prompting

When to Use: Structured outputs where examples clarify expectations.

Enterprise Example: Show a few input/output pairs, such as sample contract clauses with their expected labels, so the model can infer format and tone.
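A few-shot prompt can be sketched as a task description, a handful of input/output pairs, and the new input left open for the model to complete. The `Input:`/`Output:` labels, the helper name, and the sample clauses below are illustrative assumptions.

```python
def build_few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Render a task, worked examples, and a final open-ended query."""
    parts = [task, ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    parts.append(f"Input: {query}")
    parts.append("Output:")  # left open so the model continues in the same format
    return "\n".join(parts)


# Hypothetical contract-clause examples that demonstrate the expected JSON shape.
examples = [
    ("Payment is due within 30 days of invoice.", '{"clause": "payment_terms", "days": 30}'),
    ("Either party may terminate with 60 days notice.", '{"clause": "termination", "days": 60}'),
]

prompt = build_few_shot_prompt(
    task="Classify the contract clause and return JSON in the format shown.",
    examples=examples,
    query="Invoices must be settled within 45 days.",
)
print(prompt)
```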

3. Chain-of-Thought Prompting

When to Use: Multi-step problems where asking the model to reason out loud tends to improve accuracy.

Example:

Q: A train leaves city A at 9:00 am traveling at 60 mph. City B is 180 miles away. When will it arrive?

A: Let's think step by step.
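The train question can be wrapped in a small helper that appends the step-by-step cue. The sketch below also computes the reference answer directly (180 miles at 60 mph is 3 hours), which gives you something to compare the model’s final line against. The helper name and the placeholder date are assumptions.

```python
from datetime import datetime, timedelta


def build_cot_prompt(question: str) -> str:
    """Format a question with a chain-of-thought cue."""
    return f"Q: {question}\nA: Let's think step by step."


question = (
    "A train leaves city A at 9:00 am traveling at 60 mph. "
    "City B is 180 miles away. When will it arrive?"
)
prompt = build_cot_prompt(question)

# Reference answer, computed without a model, for checking the response.
departure = datetime(2024, 1, 1, 9, 0)   # the calendar date is arbitrary
travel_time = timedelta(hours=180 / 60)  # distance / speed = 3 hours
arrival = departure + travel_time

print(prompt)
print("Expected arrival:", arrival.strftime("%H:%M"))  # 12:00
```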

4. Instruction + Output Formatting

When to Use: Force structure using delimiters or schemas.

Example: Summarize the following article. Output should be JSON with "title", "key_points", and "conclusion".
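Asking for JSON is only half of the pattern; the other half is checking that the reply actually has the requested shape. Here is a minimal sketch using only the standard library. The `<article>` delimiter tags, the helper names, and the sample reply are assumptions for illustration.

```python
import json

REQUIRED_KEYS = {"title", "key_points", "conclusion"}


def build_summary_prompt(article: str) -> str:
    """Ask for a JSON summary and fence the article with delimiter tags."""
    return (
        "Summarize the article between the <article> tags.\n"
        'Respond with a single JSON object with the keys "title", '
        '"key_points" (a list of strings), and "conclusion". '
        "Do not add any text outside the JSON.\n\n"
        f"<article>\n{article}\n</article>"
    )


def parse_summary(raw: str) -> dict:
    """Parse a model reply and reject it if any required key is missing."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# A hand-written reply standing in for real model output.
sample_reply = '{"title": "T", "key_points": ["a", "b"], "conclusion": "C"}'
summary = parse_summary(sample_reply)
print(summary["key_points"])
```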

According to a Microsoft research study (2023), chain-of-thought prompting improved reasoning accuracy in LLMs by up to 40% for multi-step tasks.

Scaling Prompt Engineering for Complex Workflows

As applications mature, developers often need multi-step orchestration and integration with external systems.

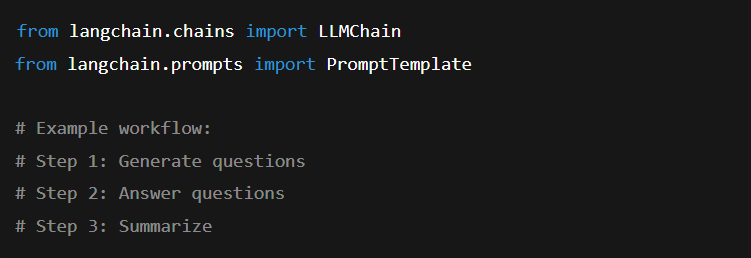

1. Prompt Chaining

Definition: Feeding the output of one prompt in as the input to the next, a pattern common in multi-agent and tool-using systems.

Use Case: Research pipelines where a document is summarized → questions are generated → answers are synthesized.

Example Tools: LangChain, Semantic Kernel.

Industry Adoption: Multi-agent frameworks like AutoGPT and BabyAGI use chaining as the backbone of their workflows.
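The summarize → generate questions → synthesize answers pipeline can be sketched without any framework. In this sketch the model is any callable that maps a prompt string to a reply string, so a stub can stand in for a real API client; `run_chain`, `fake_llm`, and the prompt wording are all hypothetical.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function that maps a prompt to a completion


def run_chain(document: str, llm: LLM) -> dict[str, str]:
    """Run three prompts in sequence, feeding each output into the next."""
    summary = llm(f"Summarize the following document in five sentences:\n\n{document}")
    questions = llm(f"List three questions a reviewer would ask about this summary:\n\n{summary}")
    answers = llm(
        "Answer each question using only the summary.\n\n"
        f"Summary:\n{summary}\n\nQuestions:\n{questions}"
    )
    return {"summary": summary, "questions": questions, "answers": answers}


calls: list[str] = []


def fake_llm(prompt: str) -> str:
    """Stub model for local testing: records the prompt and returns a numbered reply."""
    calls.append(prompt)
    return f"<reply {len(calls)}>"


result = run_chain("Example document text.", fake_llm)
print(result)
```

Because each step is a plain function call, intermediate outputs can be logged or validated before they are passed on, which is where chains tend to fail in practice.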

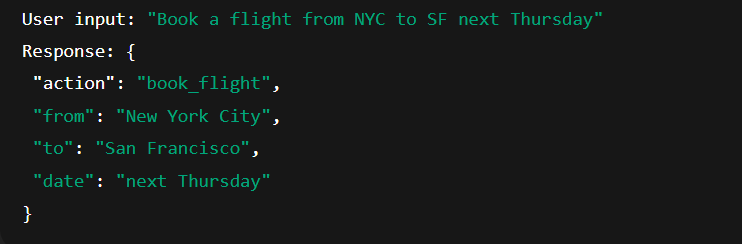

2. Function Calling (OpenAI, Claude)

Definition: Instructing the LLM to return structured JSON that maps directly to an API function call.

Real-World Use: Travel booking assistants parsing requests into structured API calls.

Benefit: Eliminates “free-text ambiguity” and allows direct system integration.

Example: Return a JSON object describing the user's intent:
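The sketch below shows the receiving side of that idea: a JSON intent is parsed and routed to a matching Python function. The `name`/`arguments` shape, the `book_flight` function, and the sample reply are assumptions for illustration. Native function-calling features in provider APIs take tool definitions as part of the request and have their own response formats, which this sketch does not reproduce.

```python
import json


def book_flight(origin: str, destination: str, date: str) -> str:
    """Hypothetical backend function; a real one would call a booking API."""
    return f"Booked {origin} -> {destination} on {date}"


# Map of function names the model is allowed to invoke.
HANDLERS = {"book_flight": book_flight}


def dispatch(model_reply: str) -> str:
    """Parse the model's JSON intent and call the matching handler."""
    call = json.loads(model_reply)
    handler = HANDLERS.get(call.get("name"))
    if handler is None:
        raise ValueError(f"unknown function: {call.get('name')!r}")
    return handler(**call["arguments"])


# A hand-written reply standing in for real model output.
reply = (
    '{"name": "book_flight", '
    '"arguments": {"origin": "SFO", "destination": "JFK", "date": "2025-03-01"}}'
)
print(dispatch(reply))
```

Routing through an explicit allow-list such as `HANDLERS` means an unexpected function name fails loudly instead of being executed.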

OpenAI reported (2023) that structured function calling reduced API invocation errors by 70% in production environments.

3. Debugging Prompt Failures

Prompt errors are common. Structured debugging can prevent wasted cycles. Use LLM-as-a-judge frameworks to automatically score and filter outputs. Best practice? Pair prompts with evaluation metrics. Tools like TruLens or LangSmith can benchmark LLM outputs against quality criteria like correctness and relevance.

| Symptom | Likely Cause | Fix |
|---|---|---|
| Hallucinated facts | Prompt is under-specified | Add role + verification rules |
| Inconsistent formatting | No explicit output constraints | Use JSON schema / delimiters |
| Repetition or cutoff | Prompt exceeds token limit | Use summarization / chunking |
| Wrong tone or style | Lack of role/context setup | Use “You are a…” system prompt |

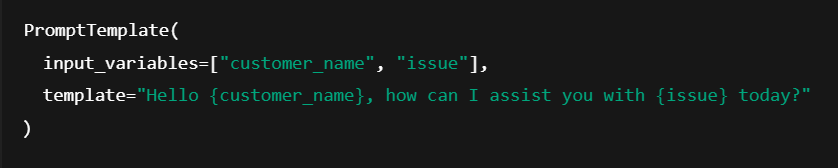

4. Packaging Prompts for Production

For production readiness, treat prompts like software artifacts.

Prompt Templates

Use string templates with parameters for consistent prompt generation.
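A minimal version of this uses `string.Template` from the Python standard library; LangChain’s PromptTemplate, listed later in this guide, serves a similar purpose. The template text and parameter names here are illustrative assumptions.

```python
from string import Template

# Parameters are marked with $name and filled in at call time.
SUMMARY_TEMPLATE = Template(
    "You are a $role.\n"
    "Summarize the text below in at most $max_sentences sentences.\n\n"
    "Text:\n$text"
)

prompt = SUMMARY_TEMPLATE.substitute(
    role="legal analyst",
    max_sentences=3,
    text="<contract text>",
)
print(prompt)
```

`substitute` raises `KeyError` when a parameter is missing, so an incomplete prompt fails before it is ever sent to a model.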

Prompt Versioning & Observability

Track prompt changes like code:

- Use Git + YAML files

- Log outputs for regression analysis

- Tag prompts per use case or model version
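One way to sketch this in code is a small record per prompt version, with a content hash that ties each logged output back to the exact template text. In practice the fields might be loaded from a YAML file tracked in Git; this sketch keeps them inline to stay within the standard library. The class, field names, and sample templates are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str      # use case tag, e.g. "nda_extract"
    version: str   # human-assigned version label
    model: str     # model the prompt was tuned against
    template: str  # the prompt text itself

    @property
    def fingerprint(self) -> str:
        """Short content hash of the template text."""
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


def log_record(prompt: PromptVersion, output: str) -> dict[str, str]:
    """Build a log entry that can be replayed later for regression analysis."""
    return {
        "prompt": prompt.name,
        "version": prompt.version,
        "model": prompt.model,
        "fingerprint": prompt.fingerprint,
        "output": output,
    }


v1 = PromptVersion("nda_extract", "1.0.0", "gpt-4", "Extract fields: $text")
v2 = PromptVersion("nda_extract", "1.1.0", "gpt-4", "Extract fields as JSON: $text")
print(log_record(v1, "{}"))
```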

Guardrails

Use output validators:

- Regex for format

- Pydantic / JSON schema for data type enforcement

- LLM-as-judge for subjective criteria
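The first two validator types can be sketched with the standard library alone: a regex for format and explicit type checks standing in for what Pydantic or a JSON Schema validator would do. The field names `effective_date` and `amount` are hypothetical, and the LLM-as-judge step is left out because it needs a model call.

```python
import json
import re

DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


def validate_output(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the output passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]

    problems = []
    date = data.get("effective_date")
    if not isinstance(date, str) or not DATE_RE.fullmatch(date):
        problems.append("effective_date must be a YYYY-MM-DD string")
    amount = data.get("amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        problems.append("amount must be a number")
    return problems


print(validate_output('{"effective_date": "2024-01-31", "amount": 1200.5}'))
print(validate_output('{"effective_date": "Jan 31", "amount": "12"}'))
```

Returning a list of problems rather than raising on the first one makes it easy to log every failure or feed the list back to the model in a retry prompt.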

According to Scale AI (2024), enterprises deploying guardrails saw a 50% reduction in hallucinated outputs in production.

Recommended Prompt Libraries

These libraries help structure, debug, and scale prompt engineering. Many enterprises combine LangChain (for orchestration) + PromptLayer (for monitoring) to cover both development and observability.

- LangChain PromptTemplate – modular templates for chaining

- Microsoft PromptFlow – enterprise-grade prompt orchestration

- OpenAI Cookbook – practical reference examples

- PromptLayer – logging & analytics for production

- Guidance (Microsoft) – token-aware prompt building

Real-World Use Case of Prompt Engineering: Document Extraction Bot

Many enterprises still process NDAs and contracts manually. A document extraction bot powered by prompts can automate this.

Goal: Extract structured fields from NDAs and contracts using prompts only.

Problem: Companies spend millions annually on manual contract review for compliance, risk, and finance reporting. Legal teams waste time parsing repetitive clauses like jurisdiction or termination conditions.

Setup:

- Model: GPT-4 (fine-tuned on legal data)

- Prompt Strategy: Few-shot prompting with examples of NDA clauses

- Output Enforcement: JSON schema validation

- Pipeline: Contracts ingested → prompts applied → results logged & validated
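The pipeline step of this setup (prompt applied → result validated → outcome logged) might look like the sketch below. The model is passed in as a plain callable so a stub can replace the real client. The field names come from the results reported in this use case, while the prompt wording, function names, and stub reply are assumptions. The few-shot NDA examples are omitted for brevity.

```python
import json
import logging
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("nda_extraction")

FIELDS = ("effective_date", "payment_terms", "governing_law")

PROMPT = (
    "Extract the following fields from the contract and reply with JSON only, "
    "using null for anything not stated: " + ", ".join(FIELDS) + ".\n\n"
    "Contract:\n{contract}"
)


def extract(contract: str, llm: Callable[[str], str]) -> Optional[dict]:
    """Apply the prompt, validate the reply, and log the outcome."""
    raw = llm(PROMPT.format(contract=contract))
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        log.warning("rejected non-JSON output")
        return None
    if not isinstance(data, dict) or set(data) != set(FIELDS):
        log.warning("rejected output with unexpected fields")
        return None
    log.info("extracted %s", data)
    return data


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return '{"effective_date": "2024-01-01", "payment_terms": "Net 30", "governing_law": "Delaware"}'


result = extract("<contract text>", fake_llm)
print(result)
```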

Result:

- 90%+ accuracy in extracting effective dates, payment terms, and governing law.

- Review time reduced from 3 hours per contract to under 15 minutes.

- Enabled downstream automation in compliance monitoring and financial risk scoring.

Gartner (2024) estimates that 65% of enterprise contract management will be automated using AI-driven parsing within 3 years.

Prompt Engineering as a Developer’s Toolkit

Prompt engineering is not just a soft skill; it’s a form of programming with probabilistic functions. When used with care, prompts can turn generic LLMs into specialized agents for information extraction, task automation, reasoning and planning, conversation design, and API orchestration. As models evolve, prompt engineering will remain foundational to controlling, directing, and aligning AI behavior with business goals. Partnering with a trusted Agentic AI and automation enabler can help you build a more strategic roadmap for faster ROI.

FAQs

A prompt engineer designs the right instructions (prompts) to guide large language models like GPT-4 or Claude to give accurate, structured, and useful outputs. It’s like telling the model exactly what you want and how you want it.

ChatGPT itself isn’t prompt engineering; it’s the tool. Prompt engineering is how you talk to ChatGPT (or any LLM) in a structured way, so it follows instructions and gives reliable results.

Yes. As more businesses use LLMs, they need developers and teams who can make these models behave consistently. Prompt engineering has quickly become one of the most in-demand AI skills in tech.

Absolutely. Even as AI models get smarter, they still need clear direction. Prompt engineering will continue to be the foundation for building reliable AI applications from document processing to multi-agent systems and will evolve into more advanced “AI programming.”